Introduction

Imagine two very different machine-learning systems solving societal problems. One is a rigorously pre-trained foundation model, trained on carefully curated scientific corpora, optimized for generalization and deep representation learning across mathematics, biology and computation. The other is a composite of many fine-tuned expert models that power visible downstream applications: museums, university departments, prize mechanisms and media platforms, each with a public UI and a brand.

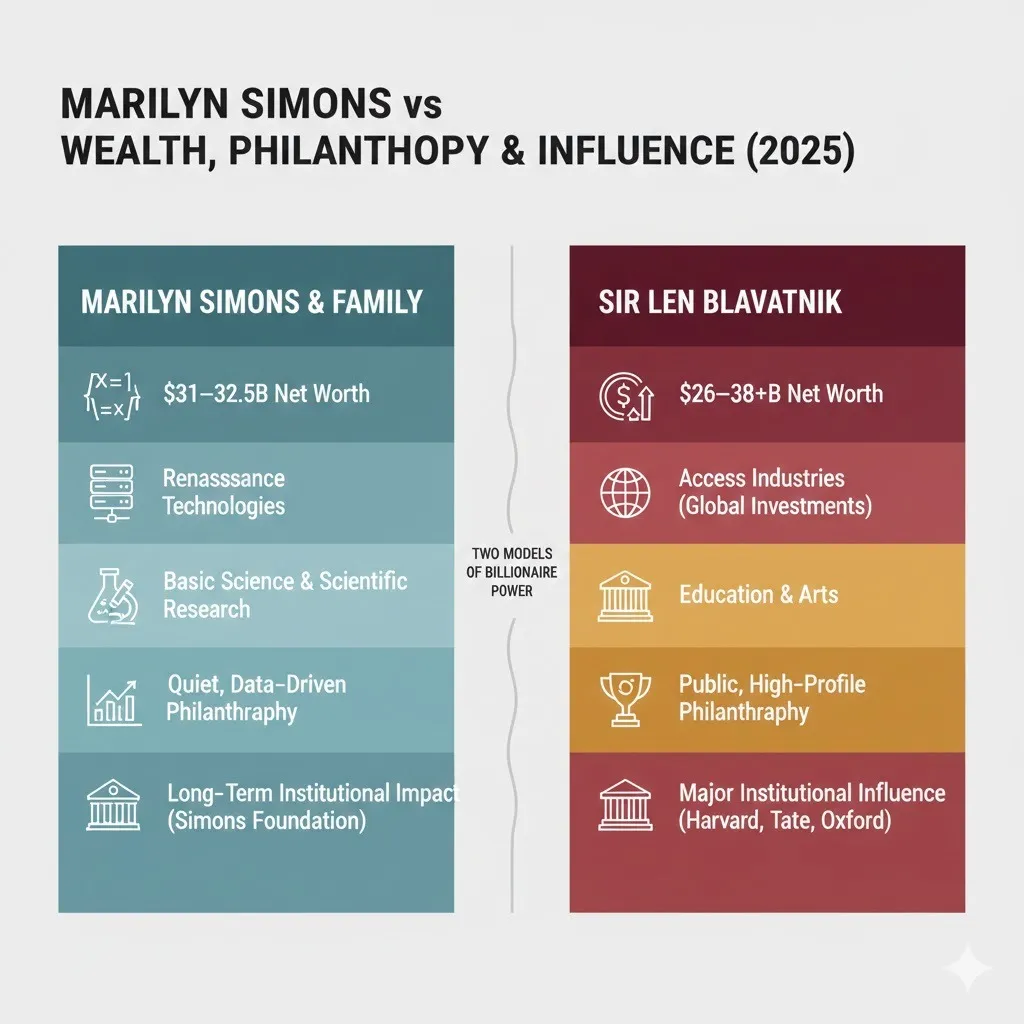

Marilyn Simons & Family and Sir Leonard (Len) Blavatnik are the real-world embodiments of those two architectures.

The Simons family behaves like a foundation-model developer for basic science: they underwrite datasets, computational infrastructure, algorithmic work (the Flatiron Institute), and long-horizon pretraining that improves the collective “weights” of science itself. Their interventions tend to be latent, producing long-term improvements to the collective representation space of human knowledge.

Len Blavatnik, by contrast, behaves like an institutional systems integrator and product CEO. He injects capital into visible institutions, purchases naming rights, and structures incentives that immediately change public outputs: new buildings, endowed chairs, and awards that shift reputational rankings. His investments resemble high-impact fine-tunes and product launches that alter what the public sees and praises.

This guide reframes their biographies, philanthropic strategies, controversies, and legacies in natural language processing (NLP) terms, mapping donor intent to model design choices, evaluation metrics, governance protocols, and societal trade-offs. The goal is not mere metaphor; it is to help readers reason about how concentrated financial resources change knowledge production, institutional incentives, and cultural attention, using a conceptual language familiar to researchers and technologists.

Quick Facts

| Category | Marilyn Simons & Family | Sir Len Blavatnik |
| --- | --- | --- |
| Full Name | Marilyn H. Simons (née Hawrys) | Sir Leonard Valentinovich Blavatnik |
| Birth Year | Public records limited (1940s–50s est.) | June 14, 1957 |
| Age (2026) | Estimated mid-70s to early-80s | 68 |
| Birthplace | United States | Odesa, Ukrainian SSR (now Ukraine) |
| Nationality | American | British & American |
| Wealth Source | Renaissance Technologies (quant hedge fund; algorithmic trading engine) | Access Industries (diversified private holdings: chemicals, media, investments) |
| Estimated Net Worth (2026) | $31–32.5 billion (est.) | $26–38+ billion (est.) |
| Philanthropy Focus | Basic science, mathematics, computational infrastructure, STEM education | Education, arts, culture, awards, institutional naming |
| Public Visibility | Low (background contributor) | High (public benefactor; naming rights) |

Models & Architectures: Simons as Foundation Model, Blavatnik as Fine-Tune/Deployment Suite

Simons: Pretraining for Science

Think of the Simons approach as building high-quality embeddings for science. The Simons Foundation invests in:

- Data curation: Funding experimental infrastructure, open datasets, and repositories that serve as training corpora for future research.

- Compute & Institutes: The Flatiron Institute functions like a compute cluster and specialized model lab enabling computational biology, astrophysics, and applied math.

- Fundamental research: Like unsupervised pretraining, basic math and theory improve model generalization for many downstream tasks.

This architecture favors transferability and robust representation learning. A heavy investment in foundational resources increases the base-rate probability that future scientific “models” (researchers, labs) will solve previously intractable problems.

Key attributes:

- Long training horizon — Results may show only after years or decades.

- Low retargeting — Grants are often non-prescriptive, enabling exploratory work.

- Peer-reviewed evaluation — Success measured by citations, theorems, datasets, and method adoption.

Blavatnik: Fine-tuning, Interfaces & Institutional Productization

Blavatnik’s model resembles a strategy for launching outward-facing products: endowed schools, named galleries, awards that generate immediate attention signals.

Core mechanics

- Fine-tuning for public impact — Capital is applied to existing institutions, shifting their output distribution (more exhibitions, programs, media coverage).

- Branding & UI — Naming rights function like user-interface changes that influence user perception and trust.

- Reputational transfer learning — Attaching a donor’s name to an institution transfers social capital and redirects external funding flows.

Key attributes

- High visibility & fast signal — Immediate public and media metrics (attendance, brand recognition).

- Strategic targeting — Investments often include conditions or explicit goals for reputation enhancement.

- Public interface evaluation — Success measured by engagement metrics, headlines, and rankings.

Training Data & Datasets: Where each donor invests

In ML, the quality and diversity of training data can be decisive. Philanthropy parallels this: dollars go to either producing new raw data and infrastructure (Simons) or reshaping how existing institutions curate and present data (Blavatnik).

Simons family: dataset creation and stewardship

- Funding long-term cohort studies, computational biology datasets, and mathematical collaborations.

- Investing in reproducibility infrastructure and open toolchains that benefit many downstream labs.

- Supporting teacher pipelines (Math for America) to improve future data generation quality (better researchers, better experiments).

Blavatnik: dataset curation through institutions

- Endowing university centers that curate archives, exhibitions, and award datasets.

- Funding prizes (Blavatnik Awards) that create labeled datasets of high-impact researchers for publicity and benchmarking.

- Shaping curricula and programs that produce more public outputs (exhibitions, reports) to be consumed by media and policymakers.

Objectives & Loss Functions: What each donor optimizes for

In optimization terms, the Simons family optimizes for scientific loss reduction: lowering prediction error across many scientific subdomains by improving data, talent, and infrastructure. Their loss function is diffuse and often measurable only through long-term performance gains.

Blavatnik optimizes for visibility/engagement utility: maximizing attention, prestige metrics, and institutional rankings. His objective function is more immediate and often includes constraints related to branding and public recognition.

Both objectives are legitimate but differ in latency, measurement, and societal signal types.

Regularization, Constraints & Governance

In machine learning, regularization prevents overfitting and improves generalization. Philanthropic governance plays a similar role: structures for oversight, transparency, and accountability regularize donor influence.

- Simons model: Often built around peer review, independent scientific advisory boards, and arms-length grantmaking. This acts like L2 regularization; it reduces variance in outcomes by privileging rigorous, community-driven evaluation.

- Blavatnik model: Sometimes uses explicit contracts, naming agreements, and board seats. This is akin to constrained optimization: high impact and visibility, but with stronger directional bias and the potential to overfit public institutions to donor priorities.
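The contrast between a soft penalty and a hard constraint can be written out as a rough sketch. The notation is an assumption introduced here for illustration, not a description of how either donor actually operates: $\theta$ stands for an institution's priorities, $L(\theta)$ for a loss measuring unmet scientific or public need, $\theta_0$ for a community-set baseline, $\lambda$ for the strength of peer oversight, and $g(\theta) \le 0$ for the terms of a gift agreement.

```latex
\begin{aligned}
\text{Peer-review style (L2 penalty):}\quad
  & \min_{\theta}\; L(\theta) + \lambda \,\lVert \theta - \theta_0 \rVert_2^2,
  \qquad \lambda > 0 \\
\text{Contract style (hard constraint):}\quad
  & \min_{\theta}\; L(\theta)
  \quad \text{subject to} \quad g(\theta) \le 0
\end{aligned}
```

In the first form, departures from the baseline are allowed but become more costly the further they go. In the second, any priorities that violate the agreed terms are ruled out entirely, which is where the stronger directional bias comes from.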

Transparent governance and checks (audits, conflict-of-interest rules, public reporting) are the social equivalent of interpretability tools and model cards.

Interpretability & Explainability: Why scholars care who funds what

Explainable AI shows stakeholders why a model made its prediction; similarly, society wants to know how philanthropic funds influence knowledge flows and public culture.

- Black-box philanthropy (anonymous or low-profile giving) reduces the risk of public bias but can create questions about accountability.

- High-visibility philanthropy clarifies the donor’s intent but can create feedback loops where public institutions prioritize projects likely to attract future funding rather than projects that maximize epistemic value.

From an NLP lens, explainability requires documentation: public grant databases, conflict-of-interest disclosures, and transparent terms analogous to model cards and datasheets.

Robustness & Adversarial Examples: Controversies framed as attacks

Every concentrated source of funding introduces potential adversarial vulnerabilities.

- Blavatnik: Public prominence invites adversarial scrutiny about political donations, source of wealth, and the potential for donor preferences to bias institutional priorities. These are like targeted attacks exposing model weakness and misalignment.

- Simons family: Lower visibility reduces immediate attack surface but can still lead to questions about concentration of influence in a small network of advisors or opaque allocation heuristics. Lack of public signal can be perceived as low accountability, a different form of adversarial risk.

Robust philanthropic systems need stress tests: independent audits, diverse funder ecosystems, and competition to prevent single points of failure.

Evaluation Metrics: Scientific impact vs. public engagement

Choosing metrics shapes behavior. Two broad classes of metrics track donor success.

1: Scientific metrics (Simons):

- Citation impact, field-changing discoveries, data release frequency, reproducibility indices, software adoption rates.

- Slow, noisy, but aligned with long-term knowledge accumulation.

2: Public metrics (Blavatnik):

- Attendance, media mentions, endowed chairs created, prizes awarded, brand value, fundraising leverage.

- Fast, visible, and politically salient.

Both metric families have pros and cons. Over-optimizing for either can produce pathologies: publish-or-perish incentives in science, or prestige chasing in public institutions.

Case Studies: Institutional Interventions as Model Updates

Flatiron Institute & Simons investments: Upgrading the scientific base model

- Flatiron acts like a dedicated research lab that builds specialized models (computational chemistry, biology, astrophysics).

- Investments in software, compute, and data are analogous to expanding model capacity and training duration.

- Returns: improved capacity for researchers to discover patterns, publish methods, and build tools that others fine-tune.

Blavatnik School donations & Blavatnik Awards: Fine-tunes that change public priors

- A large named school (e.g., Blavatnik School of Government) is a large, visible layer inserted into the public architecture, influencing policy discourse and graduate training.

- Blavatnik Awards function as a prominent evaluation benchmark for “young scientist excellence”: they reweight academic attention and provide social reinforcement signals that influence career trajectories.

Liquidity, Portfolios & Stability: Wealth as capital versus model resources

From a financial ML perspective, wealth can be considered capital that pays for compute, data, and talent. The Simons family’s fortune is heavily tied to Renaissance Technologies, a stable but internally complex algorithmic engine producing predictable cash flows. That yields a relatively stable funding stream for long-lived institutions.

Blavatnik’s wealth, distributed across industries and markets, is more sensitive to economic cycles and asset repricing, like an ensemble whose aggregate performance depends on many noisy submodels. This creates flexibility but also volatility.

Implications for philanthropy:

- Stable funding favors long-term infrastructure projects (data centers, endowments).

- Variable funding can support opportunistic, high-impact injections but may need contingency planning.

Legacy & Long-Horizon Generalization

Models are judged by their ability to generalize to new inputs. Philanthropic legacies are judged similarly: did the intervention improve society’s ability to solve future problems?

- Simons legacy: embedding long-term improvements in the scientific base (better datasets, research institutes, and talent pipelines) increases the generalizability of human knowledge across domains.

- Blavatnik legacy: reconfiguring institutional landscapes (endowed schools, museums, awards) alters cultural priorities and public-facing capabilities, which can accelerate public uptake of certain disciplines and ideas.

Both types of legacy change the hypothesis space available to future researchers and leaders.

Public Perception, Reputation Systems & Feedback Loops

Publicly visible donors produce rapid reputational feedback. Media, scholars, and civil society react, and institutions respond by changing priorities to capture future funding. This feedback loop is akin to online reinforcement learning where actions (donations) change the reward function (prestige), which in turn shapes future actions (institutional programming).
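The loop described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a full reinforcement-learning algorithm and not a model of any real institution: the function name `simulate_prestige_share`, the reward values, and the replicator-style update rule are all hypothetical choices made for illustration.

```python
def simulate_prestige_share(share, reward_prestige, reward_epistemic,
                            rate=0.5, steps=10):
    """Return the trajectory of the share of programming aimed at prestige.

    Toy replicator-style update (an illustrative assumption): the share grows
    when prestige-oriented work earns more reward than epistemic work, and
    shrinks when it earns less.
    """
    history = [share]
    for _ in range(steps):
        # Reward gap between the two kinds of programming.
        advantage = reward_prestige - reward_epistemic
        # The s * (1 - s) factor slows movement near 0 and 1.
        share = share + rate * advantage * share * (1.0 - share)
        # Keep the share a valid fraction.
        share = min(1.0, max(0.0, share))
        history.append(share)
    return history


if __name__ == "__main__":
    # Hypothetical rewards: prestige work attracts somewhat more funding.
    drift = simulate_prestige_share(0.3, reward_prestige=1.0,
                                    reward_epistemic=0.6)
    print([round(s, 3) for s in drift])
```

When the two rewards are equal the share stays where it started; any persistent gap moves programming steadily toward whichever kind of work funders reward more.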

Simons’ quieter approach produces slower feedback loops but can generate durable improvements with less susceptibility to performative cycles. Blavatnik’s high-visibility approach changes public attention quickly but can also produce oscillations driven by media cycles.

Ethical Considerations: Bias, Access, and Equity

In machine learning, bias can emerge from unrepresentative training data. Philanthropy can likewise introduce or reduce societal biases.

- Investments in fundamental science (Simons) tend to be accessible to global research communities via open datasets and tools, reducing certain access barriers. However, if funding is concentrated within elite networks, it can reproduce elite capture.

- Institutional gifts that confer naming rights (Blavatnik) can concentrate symbolic capital in specific institutions, sometimes increasing access but sometimes reinforcing prestige hierarchies.

Ethical governance requires attention to distributive outcomes: who benefits from funding, and are selection mechanisms equitable?

Risk Management & Institutional Resilience

Robust institutions anticipate funding shocks, governance transitions, and public scrutiny.

- Endowment structures, diversified funding sources, and transparent governance increase resilience.

- Philanthropists who push for immediate impact should also fund contingency and oversight budgets to prevent mission drift caused by reputational pressures.

From an NLP standpoint, think of it as ensuring model robustness under domain shift and adversarial input.

Lessons for Donors, Institutions & Leaders

- Invest in quality data: High-quality datasets and open infrastructure compound across generations of research; treat them like permanent training corpora.

- Balance pretraining and fine-tuning: Combine long-term infrastructure support with targeted public programs to get both deep and immediate impact.

- Publish model cards: Donors and institutions should publish clear grant terms, evaluation metrics, and governance rules — the philanthropic equivalent of model cards.

- Avoid single-source dependence: Diversify funding to prevent overfitting institutional priorities to a single donor’s objectives.

- Design fair evaluation metrics: Use mixed metrics (scientific rigor + public utility) to stabilize incentives.

- Enable interpretability: Require transparency and independent review for large gifts, especially those that include naming rights or governance clauses.

- Fund resilience: Provide resources for oversight, audits, and contingency, the regularizers of institutional health.
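The “mixed metrics” lesson above could be sketched as a weighted blend of two normalized scores. The function name `mixed_score`, the assumption that both inputs have already been scaled to the range 0 to 1, and the default equal weighting are illustrative choices, not a recommended evaluation standard.

```python
def mixed_score(scientific, public, weight_scientific=0.5):
    """Blend two normalized scores (each in [0, 1]) into one evaluation score.

    weight_scientific is the share of the final score given to scientific
    rigor; the remainder goes to public utility.
    """
    if not 0.0 <= weight_scientific <= 1.0:
        raise ValueError("weight_scientific must be in [0, 1]")
    for name, value in (("scientific", scientific), ("public", public)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} score must be in [0, 1]")
    return weight_scientific * scientific + (1.0 - weight_scientific) * public


if __name__ == "__main__":
    # Hypothetical program: strong science, modest public reach.
    print(mixed_score(0.8, 0.4))
    # Same program judged by a funder that weights science more heavily.
    print(mixed_score(0.8, 0.4, weight_scientific=0.75))
```

Publishing the weight alongside the score is what makes the blend useful: it states openly how much each kind of impact counts, so an institution cannot maximize one dimension while quietly neglecting the other.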

Timeline: Key Events

| Year | Simons Family (signal events) | Len Blavatnik (signal events) |
| --- | --- | --- |
| 1957 | - | Born in Odesa |
| 1978 | Jim Simons founds Renaissance (signal: algorithmic engine operational) | - |
| 1994 | Simons Foundation founded (training corpus & compute investments begin) | - |
| 2017 | - | Knighted (public signal; reputation update) |
| 2023 | $500M gift to Stony Brook University (capacity building; infrastructure injection) | Continued major public giving |
| 2024 | Jim Simons dies (model steward change event) | - |

These are anchor points; the full story includes many smaller updates (grants, datasets launched, awards made) that alter the “weights” of academic fields and public institutions.

Head-to-Head Comparison Table

| Category | Simons Family (Foundation-model) | Len Blavatnik (Product/Deployment suite) |
| --- | --- | --- |
| Giving Style | Quiet, research-driven pretraining | Public, institution-focused fine-tuning |
| Main Impact Area | Science & mathematics (representation learning) | Culture, education, prizes (interface & UX) |
| Measurement | Slow, citation & adoption based | Fast, engagement & prestige based |
| Visibility | Low | High |
| Governance tendency | Peer review & distributed grants | Contractual, naming rights & explicit conditions |
| Controversy profile | Minimal public scrutiny | Periodic scrutiny and debate |

Public Perception & Controversies

Simons Family

- Perception: highly respected within academia; recognized for strengthening research infrastructure.

- Controversy: relatively low public controversy; most critique centers on concentration of resources in elite institutions (a systemic issue rather than donor-specific).

Len Blavatnik

- Perception: lauded for generous public gifts and cultural patronage.

- Controversy: his high profile invites criticism, including questions about political donations, the ethics of naming, and the concentration of influence. These are analogous to concerns about opaque training datasets and undisclosed optimization objectives in ML systems.

FAQs

Q: Who is wealthier, the Simons family or Len Blavatnik?

A: As of 2026, public estimates generally place the Simons family slightly ahead in aggregate net worth, though both are within a similar order of magnitude. Net worth estimates for both vary due to private holdings, valuation techniques, and market sensitivity; treat these as rough, model-based estimates with confidence intervals rather than precise point estimates.
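Treating the figures as ranges rather than point estimates can be made concrete with a small check on the Quick Facts numbers. This is a sketch only: the helper name `ranges_overlap` is hypothetical, and because the table gives Blavatnik's upper figure as "38+", the value 38 is used here as a conservative stand-in for an open-ended bound.

```python
def ranges_overlap(a, b):
    """Return True if closed intervals a=(low, high) and b=(low, high) intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


# Estimated net worth in billions of USD, copied from the Quick Facts table.
SIMONS = (31.0, 32.5)
# The table lists "$26-38+"; 38.0 is an assumed, conservative upper bound.
BLAVATNIK = (26.0, 38.0)

if __name__ == "__main__":
    print(ranges_overlap(SIMONS, BLAVATNIK))  # prints True
```

Because the two ranges overlap, the ordering cannot be settled from these estimates alone, which is why any ranking should be read as tentative.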

Q: Which donor focuses more on science?

A: The Simons family focuses more heavily on basic science, mathematics, computational research, and long-term research infrastructure; their portfolio is heavily skewed toward science funding. Blavatnik also funds scientific and educational programs, but his public footprint emphasizes museums, cultural institutions, policy schools, and awards, making his impact more visible in public culture.

Q: Why does Blavatnik’s philanthropy attract more controversy?

A: Blavatnik’s giving tends to be high-visibility and institution-shaping, including naming rights and connections to political donations; these features attract scrutiny about donor influence and the provenance of wealth. High visibility acts like a public audit that exposes the gift to political and ethical questions.

Q: Which philanthropic model is better?

A: There is no single “better” model. Each addresses different failure modes: Simons-style funding reduces epistemic risk and builds research capacity; Blavatnik-style funding accelerates institutional capabilities and public engagement. Optimal societal outcomes typically combine both approaches: stable investment in knowledge infrastructure plus public-facing programs that translate discoveries into social value.

Conclusion

Marilyn Simons & family and Sir Len Blavatnik exemplify two complementary approaches to concentrated philanthropic capital. One builds foundational capacity for knowledge extraction (pretraining); the other amplifies public institutions and reputational signals (deployment and fine-tuning). Both reshape the hypothesis space available to future researchers, leaders, and cultural producers.

From an NLP perspective, healthy scientific and cultural ecosystems require both kinds of investments: deep pretraining to improve the underlying representation of knowledge, and well-designed product launches to ensure discovery reaches people and policy. Governance, transparency, diversity of funders, and robust evaluation metrics are the regulatory layers that keep those systems aligned with public values.