Introduction

Alexander (Alex) Caedmon Karp is an unusual figure in modern technology: a CEO whose intellectual formation comes from philosophy, law, and social theory rather than computer science. He co-founded Palantir Technologies, a company that builds platforms for integrating messy data into operational decision-making. Reading Karp through the lens of natural language processing (NLP) and data science gives us fresh analytic terms for understanding his decisions, Palantir’s products, and the ethical debates that surround both.

This piece reframes Karp’s life and Palantir’s trajectory in the vocabulary of NLP and information systems. It shows how concepts such as information extraction, entity resolution, knowledge graphs, vector embeddings, human-in-the-loop learning, explainability, and model governance help decode Palantir’s mission and controversies.

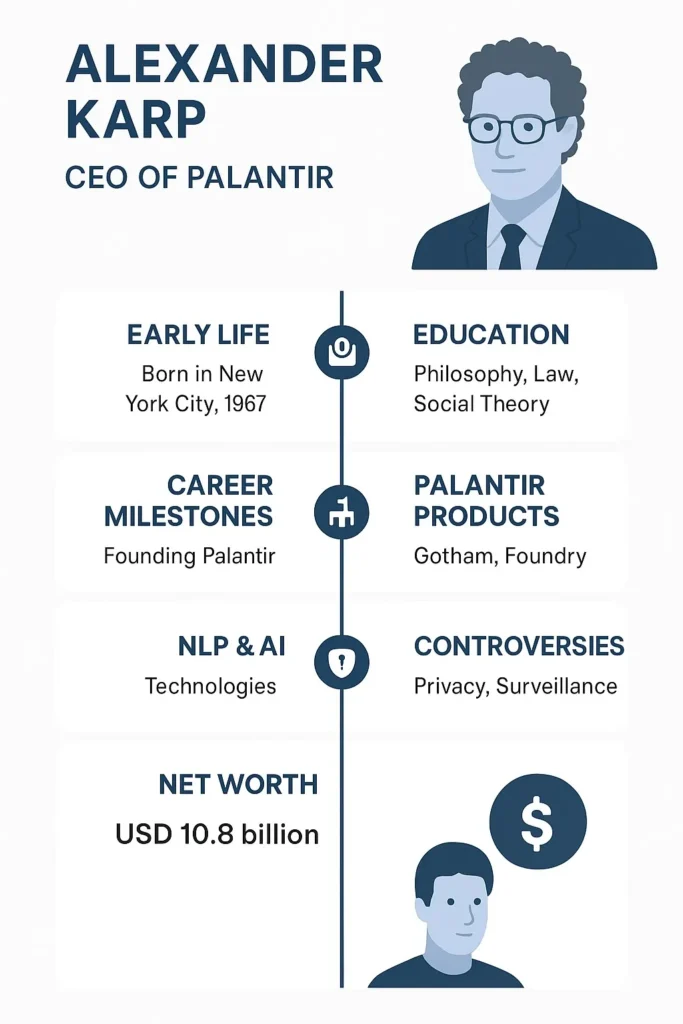

Early Life & Background: A Conditioning Dataset

In data science, we often speak about the dataset that conditions a model. Karp’s early life is that dataset. Born on October 2, 1967, in New York City and raised in Philadelphia, he grew up in a household that blended activism, medicine, and the arts. His parents (his father a pediatrician, his mother an artist) created an environment with strong social signals about justice and civic engagement. Those signals shaped Karp’s priors: a sensitivity to institutional power, a focus on public policy problems, and an inclination toward structural analysis rather than tactical engineering.

Like a model trained on heterogeneous sources, Karp was shaped by variability and contrast. He has spoken about dyslexia as a childhood learning difference that influenced how he processes information. From an early age, he was exposed to both protest culture and powerful institutions, environments that trained him to observe systems, incentives, and social dynamics. Karp also developed a lasting sense of being “othered,” which strengthened his independence of thought. Rather than approaching challenges only through tactics, he learned to solve problems through theory and abstraction, a habit that made him look for high-level patterns across messy realities. That pattern-first mindset later became central to how he framed Palantir’s purpose: turning complex, fragmented, heterogeneous data into interpretable insight or, in engineering terms, converting messy operational logs into actionable signals.

Education & Philosophical Priors: formalizing the hypothesis space

Karp’s education set his hypothesis space. He studied philosophy at Haverford College (BA, 1989), then law at Stanford (JD, 1992), and later earned a doctorate in social theory at Goethe University in Frankfurt (2002). This trajectory is notable for what it doesn’t contain: formal training in computer science or statistical machine learning. That absence matters because it helps explain why Karp treats computation as an instrument for social ends rather than as a purely technical puzzle.

From an NLP perspective, his training is analogous to a deep grounding in symbolic semantics, discourse theory, and institutional analysis, all of which matter when designing systems that mediate meaning between human institutions and computational models. His doctoral work in social theory can be read as constructing top-level ontologies of power, governance, and legitimacy, which he later applied to how data infrastructures should be structured and governed.

Career Path: from research to engineered production systems

Early career and investment signals

After the doctorate, Karp worked in research at the Sigmund Freud Institute and managed money in London through the Caedmon Group. In ML terms, this was a period of exploratory model building and capital acquisition: small experiments, funded by private capital that, like a compute budget, made larger-scale experiments possible later.

Founding Palantir: designing a mission-oriented pipeline

Karp co-founded Palantir in the early 2000s (2003/2004) with Peter Thiel and a group of engineers who wanted to build infrastructure for turning operational chaos into decision-level signal. Mapped onto an NLP pipeline, its core components are:

- Ingestion and normalization: Connectors that pull logs, documents, relational tables, geospatial feeds and convert them into interoperable formats.

- Entity extraction & resolution: Algorithms and logic for turning mentions into canonical entities (people, organizations, assets) across heterogeneous sources.

- Knowledge graph construction: Linking entities into graphs that make explicit relationships and enable graph analytics.

- Search & semantic querying: Enabling investigators to find relevant patterns via structured queries, faceted search, and semantic match.

- Human-in-the-loop curation: Workflows that let analysts vet, label, and refine outputs, which is essential for high-assurance uses.

- Decision support & visualization: Dashboards and operational playbooks that convert analytic inferences into actions.
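The six stages above can be sketched as a toy Python pipeline. This is a minimal illustration under stated assumptions, not Palantir’s implementation: the source tables (`CRM_ROWS`, `EMAIL_ROWS`), the lowercase-and-strip-punctuation matching rule, and every function name are hypothetical, and the decision-support stage is reduced to printing the vetted facts.

```python
from collections import defaultdict

# Hypothetical raw inputs from two sources with different schemas.
CRM_ROWS = [{"FullName": "Acme Corp.", "City": "Boston"}]
EMAIL_ROWS = [{"sender_org": "ACME CORP", "subject": "Invoice 42"}]


def ingest():
    """Ingestion and normalization: map both sources onto one shared record shape."""
    records = [{"source": "crm", "org": r["FullName"], "detail": r["City"]} for r in CRM_ROWS]
    records += [{"source": "email", "org": r["sender_org"], "detail": r["subject"]} for r in EMAIL_ROWS]
    return records


def canonical(name):
    """Entity resolution (toy rule): lowercase and drop punctuation."""
    return "".join(ch for ch in name.lower() if ch.isalnum() or ch == " ").strip()


def build_graph(records):
    """Knowledge graph (simplified): canonical entity -> list of (source, detail) facts."""
    graph = defaultdict(list)
    for rec in records:
        graph[canonical(rec["org"])].append((rec["source"], rec["detail"]))
    return graph


def search(graph, query):
    """Search: return every fact attached to the canonical form of the query."""
    return graph.get(canonical(query), [])


def review(facts, approved_sources):
    """Human-in-the-loop: keep only facts from sources an analyst has approved."""
    return [fact for fact in facts if fact[0] in approved_sources]


graph = build_graph(ingest())
facts = review(search(graph, "Acme Corp"), approved_sources={"crm", "email"})
print(facts)  # decision support, reduced here to showing the vetted facts
```

Because "Acme Corp." and "ACME CORP" normalize to the same key, facts from both sources land on one entity; real resolution logic is far richer, but the stage boundaries are the point.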

Palantir’s Technology Stack: NLP primitives in an enterprise shell

Palantir’s flagship products, Gotham for government and Foundry for commercial customers, incorporate many practices familiar to NLP and applied ML engineers.

Data integration and schema mapping

Real-world enterprise data is messy. Palantir’s early competitive edge was its focus on data integration: mapping disparate schemas, resolving inconsistent identifiers, and creating canonical representations. From an NLP angle, this is analogous to schema induction and alignment: building mappings from multiple text and table sources into a consolidated representation that downstream components can trust.
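A minimal sketch of schema mapping, assuming two hypothetical warehouse feeds whose field names differ (`SCHEMA_MAPS` and `to_canonical` are illustrative names, not a Palantir API). Each source gets an explicit mapping onto canonical fields, and identifiers and types are normalized so records from both feeds can be joined downstream.

```python
from datetime import date

# Hypothetical per-source mappings: source field name -> canonical field name.
SCHEMA_MAPS = {
    "warehouse_a": {"sku": "part_id", "qty_on_hand": "quantity", "updated": "as_of"},
    "warehouse_b": {"PartNo": "part_id", "Count": "quantity", "LastSeen": "as_of"},
}


def to_canonical(source, row):
    """Rename fields per the source's mapping, then normalize identifiers and types."""
    mapping = SCHEMA_MAPS[source]
    out = {canon: row[field] for field, canon in mapping.items()}
    out["part_id"] = str(out["part_id"]).strip().upper()  # " ab-100" -> "AB-100"
    out["quantity"] = int(out["quantity"])               # "7" and 7 both become ints
    out["as_of"] = date.fromisoformat(out["as_of"])      # ISO string -> date object
    out["source"] = source                               # keep the record's lineage
    return out


a = to_canonical("warehouse_a", {"sku": " ab-100", "qty_on_hand": "7", "updated": "2024-05-01"})
b = to_canonical("warehouse_b", {"PartNo": "AB-100", "Count": 3, "LastSeen": "2024-05-02"})
print(a["part_id"] == b["part_id"], a["quantity"] + b["quantity"])  # True 10
```

Keeping the mapping as data rather than code means a new source can be onboarded by adding a dictionary entry, which keeps schema changes small and reviewable.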

Information extraction & entity resolution

Key to intelligence and law enforcement use cases is the ability to extract mentions from documents and link them to identities. Palantir’s pipelines blend rule-based extraction, regular expressions, probabilistic matching, and manual curation. Hybrid systems combining deterministic rules with statistical fuzzy matchers are a hallmark of high-assurance NLP in regulated domains.
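The hybrid pattern can be sketched in a few lines: a deterministic regular expression proposes organization mentions, and a fuzzy string matcher links them to a canonical registry, sending low-confidence matches to a human. The registry, the suffix-based pattern, and the 0.85 threshold are assumptions for illustration; `difflib.SequenceMatcher` stands in for whatever probabilistic matcher a production system would use.

```python
import re
from difflib import SequenceMatcher

# Hypothetical canonical registry of known organizations.
CANONICAL = {"ORG-1": "Northwind Shipping Ltd", "ORG-2": "Contoso Logistics Inc"}

# Deterministic rule: one or more capitalized words followed by a company suffix.
ORG_PATTERN = re.compile(r"\b(?:[A-Z][a-z]+ )+(?:Ltd|Inc|LLC)\b")


def extract_mentions(text):
    """Rule-based extraction of organization mentions."""
    return [m.group(0) for m in ORG_PATTERN.finditer(text)]


def resolve(mention, threshold=0.85):
    """Statistical linking: best fuzzy match in the registry, or None for analyst review."""
    best_id, best_score = None, 0.0
    for ent_id, name in CANONICAL.items():
        score = SequenceMatcher(None, mention.lower(), name.lower()).ratio()
        if score > best_score:
            best_id, best_score = ent_id, score
    return (best_id, best_score) if best_score >= threshold else (None, best_score)


text = "Wire sent to Contoso Logistic Inc on Monday; Northwind Holdings LLC was copied."
for mention in extract_mentions(text):
    ent_id, score = resolve(mention)
    print(mention, "->", ent_id or "REVIEW QUEUE", round(score, 2))
```

The misspelled "Contoso Logistic Inc" links to ORG-2, while "Northwind Holdings LLC" shares only part of its name with ORG-1, scores below the threshold, and is routed to a human instead of being silently merged.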

Knowledge graphs and graph analytics

Knowledge graphs are central. Graph structures capture relations such as “worked_with,” “was_present_at,” or “owns.” Graph algorithms (shortest paths, community detection, centrality measures) provide inferential signals that conventional flat ML features cannot capture. Palantir’s tools let analysts build and explore these graphs interactively.
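As a rough illustration of graph analytics over typed relations, the sketch below builds an adjacency list from hypothetical (subject, relation, object) triples, finds the fewest-hop connection between two entities with breadth-first search, and computes degree centrality. The entities and the choice to treat every relation as undirected are assumptions made to keep the example small.

```python
from collections import defaultdict, deque

# Hypothetical typed edges: (subject, relation, object).
TRIPLES = [
    ("alice", "worked_with", "bob"),
    ("bob", "was_present_at", "warehouse_7"),
    ("carol", "owns", "warehouse_7"),
    ("dave", "worked_with", "carol"),
]


def build_adjacency(triples):
    """Undirected adjacency sets; relation types are ignored for path finding."""
    adj = defaultdict(set)
    for subj, _, obj in triples:
        adj[subj].add(obj)
        adj[obj].add(subj)
    return adj


def shortest_path(adj, start, goal):
    """Breadth-first search: fewest hops between two entities, or None."""
    prev = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nbr in sorted(adj[node]):
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    return None


def degree_centrality(adj):
    """Share of other nodes each node is directly connected to."""
    n = len(adj)
    return {node: len(nbrs) / (n - 1) for node, nbrs in adj.items()}


adj = build_adjacency(TRIPLES)
print(shortest_path(adj, "alice", "dave"))
print(degree_centrality(adj))
```

Alice and Dave never appear in the same record, yet the path through a shared warehouse connects them; that kind of multi-hop signal is what flat feature tables struggle to express.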

Vectorization & semantic search

In modern NLP, vector embeddings power semantic search and similarity queries. Palantir’s commercial offerings increasingly incorporate semantic representations (embeddings, nearest neighbor search, vector databases) for fuzzy matching across documents, emails, and logs. Embeddings make it possible to match semantically similar but lexically different content, a practical requirement when sources use different jargon, languages, or abbreviations.
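A minimal sketch of embedding-based semantic search, assuming hand-written three-dimensional vectors in place of the output of a real encoder model. The documents `doc_a` and `doc_b` share no words, yet their vectors point in similar directions, so a brute-force cosine-similarity search returns both for a hypothetical query about a ship stuck in port.

```python
import math

# Toy 3-d vectors standing in for encoder outputs (real embeddings are much larger).
DOCS = {
    "doc_a": ("shipment delayed at port", [0.90, 0.10, 0.00]),
    "doc_b": ("cargo held up in harbor", [0.85, 0.20, 0.05]),
    "doc_c": ("quarterly payroll report", [0.05, 0.10, 0.95]),
}


def cosine(u, v):
    """Cosine similarity: compares direction, so vector length does not matter."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest(query_vec, k=2):
    """Brute-force k-nearest-neighbor search over every stored vector."""
    scored = sorted(((cosine(query_vec, vec), doc_id) for doc_id, (_, vec) in DOCS.items()), reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical embedding of the query "vessel stuck in dock".
query = [0.88, 0.15, 0.02]
for doc_id in nearest(query):
    print(doc_id, DOCS[doc_id][0])
```

A production system would replace the linear scan with an approximate nearest-neighbor index, but the scoring idea is the same.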

Human-in-the-loop and active learning

Palantir emphasizes analyst feedback loops. In NLP terms, it orchestrates active learning cycles: humans label examples, models update, and the system deploys improved models with safeguards. For mission-critical use, this human supervision is non-negotiable, because false positives and false negatives have real costs.
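One round of an active learning cycle can be sketched with uncertainty sampling, a common (but not the only) strategy for choosing what humans should label. Everything here is a toy assumption: a one-feature logistic scorer, a threshold refit as the midpoint of the labeled class means, and a hypothetical function `analyst_label` standing in for a human reviewer.

```python
import math


def predict_proba(x, threshold):
    """Toy model: probability of 'relevant' rises smoothly past a threshold."""
    return 1 / (1 + math.exp(-(x - threshold)))


def select_for_review(pool, threshold, budget=2):
    """Uncertainty sampling: pick the items whose probability is closest to 0.5."""
    return sorted(pool, key=lambda x: abs(predict_proba(x, threshold) - 0.5))[:budget]


def refit(labeled):
    """Retrain: place the threshold midway between the labeled class means."""
    pos = [x for x, y in labeled if y == 1]
    neg = [x for x, y in labeled if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def analyst_label(x):
    """Hypothetical stand-in for a human reviewer's judgment."""
    return int(x >= 6.0)


labeled = [(1.0, 0), (9.0, 1)]   # seed labels
pool = [2.0, 4.5, 5.5, 8.0]      # unlabeled items
threshold = refit(labeled)       # 5.0

picked = select_for_review(pool, threshold)          # the two most uncertain items
labeled += [(x, analyst_label(x)) for x in picked]   # humans label them
pool = [x for x in pool if x not in picked]
new_threshold = refit(labeled)                       # the model updates
print(sorted(picked), round(new_threshold, 2))
```

Human effort goes to the borderline items (4.5 and 5.5), not the easy ones. In a real deployment the updated model would be evaluated on held-out data before replacing the old one; that safeguard is omitted here.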

Explainability & audit trails

Government contracts demand explanations and auditability. Palantir’s platforms therefore include provenance tracking, which is analogous to model interpretability and traceability in NLP: every inference is tied back to source documents, extraction rules, and analyst decisions. This lineage is critical for legal compliance and internal oversight.
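Provenance can be enforced at the data-structure level. The sketch below, with hypothetical class and field names, makes an inference object refuse to exist without at least one cited source, and records the rule or model version and the reviewing analyst so an audit trail can be rendered for every claim.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class SourceRef:
    doc_id: str
    span: tuple  # (start, end) character offsets in the source document


@dataclass(frozen=True)
class Inference:
    claim: str
    sources: tuple         # SourceRef objects supporting the claim
    rule_id: str           # extraction rule or model version that produced it
    reviewed_by: str = ""  # approving analyst; empty means unreviewed
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self):
        # No source, no inference: unsupported claims cannot enter the system.
        if not self.sources:
            raise ValueError("an inference must cite at least one source")


def lineage(inf):
    """Render one audit-trail line per supporting source."""
    status = f", approved by {inf.reviewed_by}" if inf.reviewed_by else ", unreviewed"
    return [f"{inf.claim} <- {s.doc_id}[{s.span[0]}:{s.span[1]}] via {inf.rule_id}{status}"
            for s in inf.sources]


inf = Inference("ORG-2 received wire", (SourceRef("email_0412", (13, 33)),), "org_regex_v3", "analyst_17")
print(lineage(inf))
```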

Operationalization and MLOps

Deploying models into the field requires continuous monitoring, retraining, and data drift detection. Palantir’s Foundry provides an MLOps layer for versioning models, tracking metrics, and orchestrating retraining with domain engineers: in essence, the production-grade ML pipelines that NLP systems require at scale.
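Drift detection is one piece of such an MLOps layer that is easy to show concretely. The sketch below computes the Population Stability Index (PSI) between a reference sample of model scores and a live sample; the bin edges, the small floor for empty bins, and the 0.2 retraining trigger are common heuristics assumed here, not Foundry settings.

```python
import math

BINS = [0.0, 0.25, 0.5, 0.75, 1.01]  # score bins; last edge sits just above 1.0


def bin_shares(values, bins=BINS):
    """Fraction of values in each bin, floored to avoid log(0) for empty bins."""
    counts = [0] * (len(bins) - 1)
    for v in values:
        for i in range(len(bins) - 1):
            if bins[i] <= v < bins[i + 1]:
                counts[i] += 1
                break
    return [max(c / len(values), 1e-4) for c in counts]


def psi(reference, live):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e, a = bin_shares(reference), bin_shares(live)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]   # scores at training time
live = [0.8, 0.85, 0.9, 0.95, 0.7, 0.9, 0.8, 0.6]      # scores observed in the field
score = psi(reference, live)
needs_retraining = score > 0.2  # rule-of-thumb threshold, assumed here
print(round(score, 2), needs_retraining)
```

Identical distributions give a PSI of zero; here the live scores pile into the top bins, the index far exceeds 0.2, and the pipeline would flag the model for review and retraining.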

Leadership & Product Philosophy: priors, loss functions, and culture

Karp’s leadership can be described in computational metaphors:

- Strong prior: He holds firm priors about mission, national security, and the social role of technology. These priors shape product decisions and customer choice.

- Custom loss function: Palantir optimizes for mission impact and reliability rather than raw user-growth metrics. This loss function privileges accuracy, provenance, and alignment with government workflows over vanity metrics.

- Regularization via culture: Hiring people who align with the mission reduces variance in behavior across teams, a kind of cultural regularization that keeps the company focused but arguably narrows viewpoints.

His personal discipline (fitness, meditation, tai chi) and emphasis on long-term thinking reflect a CEO optimizing for steady, robust performance rather than short-term volatility.

Achievements & Technical Impact Viewed Through NLP

Karp’s major achievements overlap with technical contributions and strategic productization:

- Enterprise-grade data pipelines: Palantir built robust ingestion and integration systems that became reference architectures for mission-critical NLP and analytics.

- Operationalized knowledge graphs: Turning graph analytics into operational workflows is nontrivial; Palantir operationalized graph-driven decision making.

- Hybrid AI practice: Palantir exemplifies hybrid systems that combine deterministic symbolic logic with statistical learning, a pragmatic model for regulated domains where deep learning alone is insufficient.

- Human-centered ML: Emphasizing human judgment in looped systems advanced a model for high-assurance NLP.

- Commercial expansion of mission tech: Bringing the same pipeline design to supply chain, manufacturing, and finance demonstrates technology transfer from government to enterprise.

Wealth, Compensation & Financial Signals

Karp’s wealth is primarily stock-based, reflecting the high valuation of Palantir shares. Public reporting shows large equity sales in recent years; compensation headlines (e.g., large awards in 2024) generate debate around executive pay in firms with sensitive public contracts. From a governance standpoint, compensation packages and insider sales are important signals for stakeholders assessing alignment and risk.

Personal Life Reframed: the private model

Karp is private and disciplined. For engineers, his lifestyle can be framed as a runtime environment optimized for long-term productivity: structured routines (fitness, tai chi), sparse living spaces (minimalist homes), and focused attention, all attributes of a leader who favors deep work and slow, robust optimization.

Controversies & Risks: NLP-centered analysis

Palantir’s work touches many of the central debates in applied NLP.

Surveillance, privacy, and dataset ethics

Extracting entities and linking them across datasets is inherently privacy-sensitive. Information extraction and entity resolution across surveillance data can enable intrusive inferences. The ethical questions echo classic dataset issues in NLP: provenance, consent, representational harms, and the risk of downstream misuse.
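The privacy risk of linkage is easy to demonstrate. In the sketch below, with entirely hypothetical records, a dataset stripped of names still carries quasi-identifiers (ZIP code, birth year, sex); joining it to a public roster on those fields re-identifies one person and attaches a sensitive attribute to their name.

```python
# Hypothetical "de-identified" dataset: names removed, quasi-identifiers kept.
visits = [
    {"zip": "19104", "birth_year": 1980, "sex": "F", "clinic": "oncology"},
    {"zip": "19103", "birth_year": 1975, "sex": "M", "clinic": "cardiology"},
]
# Hypothetical public dataset that does include names.
roster = [
    {"name": "J. Doe", "zip": "19104", "birth_year": 1980, "sex": "F"},
    {"name": "R. Roe", "zip": "19106", "birth_year": 1975, "sex": "M"},
]

QUASI_IDS = ("zip", "birth_year", "sex")


def link(left, right, keys=QUASI_IDS):
    """Join two datasets on quasi-identifiers; a unique match re-identifies a record."""
    index = {}
    for row in right:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)
    matches = []
    for row in left:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:
            matches.append((candidates[0]["name"], row["clinic"]))
    return matches


print(link(visits, roster))
```

Neither dataset alone pairs a name with a clinic visit; the join does. Entity resolution at scale performs exactly this kind of linkage, which is why consent and provenance questions attach to the linked result, not just to each source.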

Bias, fairness & domain shift

Models trained on specific historical patterns may perpetuate systemic bias. When tools influence policing, immigration, or intelligence decisions, biased data or mis-specified labels produce harmful outcomes. Domain adaptation and robust evaluation are essential but often underemphasized in operational deployments.
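Robust evaluation includes checking error rates per group rather than only in aggregate. This minimal sketch, using hypothetical audit data, computes the false positive rate (the share of truly negative cases the model flagged) for two groups; the gap it reveals is the kind of signal that should trigger review before deployment.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group FPR: flagged negatives divided by all true negatives in that group."""
    flagged, negatives = defaultdict(int), defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 0:
            negatives[group] += 1
            flagged[group] += y_pred
    return {g: flagged[g] / negatives[g] for g in negatives}


# Hypothetical audit sample: (group, true label, model prediction).
records = (
    [("A", 0, 1)] * 1 + [("A", 0, 0)] * 9 + [("A", 1, 1)] * 5
    + [("B", 0, 1)] * 4 + [("B", 0, 0)] * 6 + [("B", 1, 1)] * 5
)
rates = false_positive_rates(records)
print(rates)  # group B's negatives are flagged four times as often as group A's
```

Both groups have perfect recall on true positives, so an aggregate accuracy figure would hide the disparity that the per-group view exposes.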

Explainability and contestability

Decision systems require explainable outputs and avenues for contesting automated inferences. Palantir’s attention to provenance is positive, but critics ask whether transparency is sufficient when model internals and training sets are proprietary.

Dual-use & geopolitical concentration

Technologies that help defenders can also empower authoritarian actors. The global concentration of data infrastructure and compute raises geopolitical risk: who controls model weights, data, and operational playbooks matters for how power is exercised.

Corporate ideology and stakeholder alignment

Karp’s public statements about culture and meritocracy color how employees and clients perceive Palantir. Some critics view his “anti-woke” posture as politicized corporate governance, which can affect recruitment, public perception, and the company’s ability to attract diverse perspectives, a key ingredient in robust model design and fairness testing.

Views on AI: skepticism, industrialism, and signal vs. noise

Karp has argued that much AI is “marketing fluff”: many models are demonstrations rather than mission-critical systems. Interpreted in NLP terms:

- He distinguishes industrial AI (models integrated with reliable data pipelines, human oversight, and governance) from consumer AI (apps built for engagement).

- He emphasizes value density: models must produce measurable operational lift to justify cost.

- He highlights governance: expensive models without provenance, evaluation, and robust human oversight are risky.

Karp’s public positions on large language models (LLMs) are pragmatic: LLMs offer utility but must be integrated into workflows with provenance, verification, and domain-specific constraints; otherwise, they risk hallucination and misuse.
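One simple verification step for LLM output is to check its citations against what was actually retrieved. The sketch assumes a hypothetical convention in which the model is prompted to cite sources as `[doc:ID]`; an answer is accepted only if it cites at least one source and every cited ID appears in the retrieved set.

```python
import re

# Hypothetical citation convention the model is prompted to follow: [doc:ID]
CITATION = re.compile(r"\[doc:([\w-]+)\]")


def verify_citations(answer, retrieved_ids):
    """Accept an answer only if it cites something and every citation was retrieved."""
    cited = set(CITATION.findall(answer))
    unknown = cited - set(retrieved_ids)
    return {"ok": bool(cited) and not unknown, "cited": sorted(cited), "unknown": sorted(unknown)}


retrieved = ["a12", "b7"]
print(verify_citations("Shipment delayed at port [doc:a12].", retrieved))
print(verify_citations("Port closed since May [doc:z99].", retrieved))
print(verify_citations("The port is closed.", retrieved))
```

The second answer cites a document that was never retrieved and the third cites nothing; both fail, and in a governed workflow they would be routed to an analyst rather than presented as fact. This checks only that citations exist, not that the cited text supports the claim.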

Lessons for Practitioners: engineering, governance, and ethics

For NLP engineers, product managers, and policymakers, Karp’s story offers concrete lessons:

- Build pipelines, not demos: Robust extract-transform-load (ETL), entity resolution, and schema mapping are more valuable for operations than flashy model demos.

- Hybrid systems win in regulated domains: Combine symbolic rules, deterministic logic, and statistical models; use human experts to close the loop.

- Provenance is a first-class citizen: Track sources, chain of custody, and the lineage of every inference.

- Invest in evaluation under distributional shift: Test models not just on historical accuracy but on robustness to new adversarial or shifted conditions.

- Design for contestability: Citizens and stakeholders should be able to inspect and contest automated inferences.

- Balance mission focus with diverse epistemic communities: A tight mission helps execution but broad epistemic diversity helps catch blind spots and reduce systemic risk.

Timeline

| Year | Event (NLP framing) |
|------|---------------------|
| 1967 | Born: foundational priors established. |
| 1985 | High school graduation: early training data completed. |
| 1989 | BA in Philosophy: symbolic reasoning & ontology formation. |
| 1992 | JD, Stanford: institutional knowledge & legal priors. |
| 2002 | PhD in social theory: formal models of power and governance. |
| 2003/2004 | Co-founds Palantir: builds operational pipelines for instrumentation and inference. |
| 2020 | Direct listing: company’s model deployed to public markets. |
| 2024–2026 | High-profile compensation and stock activity; intensified public debate about mission and governance. |

FAQs

Q: Who is Alex Karp?

A: He is the co-founder and CEO of Palantir Technologies, a company that creates platforms to analyze large, heterogeneous data for governments and enterprises.

Q: What is Alex Karp’s educational background?

A: Karp holds a BA in Philosophy (Haverford College), a JD from Stanford Law School, and a PhD in social theory from Goethe University in Frankfurt.

Q: Where does Alex Karp’s wealth come from?

A: Most of his wealth comes from equity in Palantir. He owns significant shares and has periodically sold stock, reflecting the high valuation of the company.

Q: What controversies surround Alex Karp?

A: Controversies include Palantir’s association with surveillance and law enforcement, debates over privacy and civil liberties, Karp’s outspoken cultural positions, and scrutiny around executive compensation.

Q: What are Alex Karp’s views on AI?

A: He differentiates industrial, mission-critical AI from marketing hype. He stresses governance, explainability, and measurable operational value, particularly for defense and national security.

Conclusion

Alexander Karp is a leader whose intellectual priors, shaped by philosophy, law, and social theory, have produced a company that treats data infrastructure as an instrument of governance. Through the vocabulary of NLP and applied ML, we can understand Palantir’s strengths (robust ingestion, entity resolution, knowledge graphs, human-in-the-loop systems, provenance) and its risks (surveillance potential, bias propagation, proprietary opacity). Karp’s insistence on mission, discipline, and long-term orientation explains Palantir’s focus on industrial, high-assurance AI rather than consumer dalliances.

For engineers and policymakers, the takeaway is pragmatic: design systems that prioritize provenance, auditability, robust evaluation under distributional shift, and mechanisms for contestability. The future of NLP in governance will require blending symbolic structure with statistical learning, embedding human judgment where the cost of error is intolerable, and building governance frameworks that make powerful inference systems accountable and contestable.