Introduction

Edwin Chen founded Surge AI at a pivotal time in the development of modern natural language systems. By 2020–2023, compute budgets and model architectures had advanced rapidly, but many teams discovered that model performance and safety were bottlenecked by the quality of the labeled data used for training, fine-tuning, and evaluation. Chen’s core insight was pragmatic and product-focused: treat labeling as a high-skill engineering problem, not a low-cost commodity. He systematized expert annotation, multi-stage QA, and repeatable annotation pipelines so that organizations building models could rely on consistent, auditable datasets for tasks like RLHF (reinforcement learning from human feedback), safety evaluation, and fine-grained content moderation.

From an NLP standpoint, that focus on annotation quality (label schemas, inter-annotator agreement, disagreement resolution, provenance, and continuous dataset maintenance) is what made Surge critical to downstream model behavior. The company’s early traction and subsequent revenue expansion turned a “data problem” into a durable business, creating a template other vendors have tried to copy.

Quick Facts

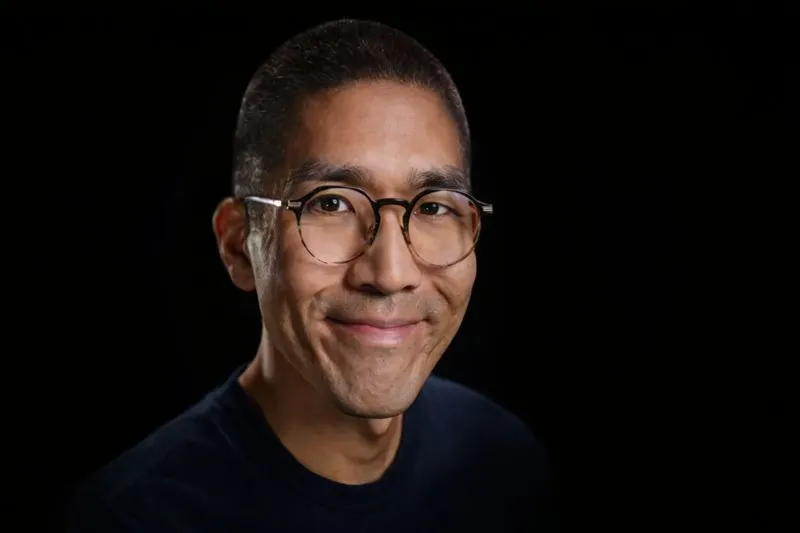

- Full name: Edwin Chen.

- Role: Founder & CEO of Surge AI.

- Education: Studied mathematics, computer science and linguistics at MIT (public profiles).

- Founding year: Surge AI was founded around 2020.

- Revenue (2024): Reported at over $1 billion for full-year 2024. (See Inc.)

- Recognitions: Included in TIME100 AI (2026).

- Background: Early engineering roles in large technology companies before founding Surge. (See Wikipedia / public profiles.)

Childhood and Education: The Quiet Start

Public material about Edwin Chen’s childhood is sparse; he’s kept a relatively private personal life. What is documented is his academic training:

- Chen attended MIT, where his coursework and interests combined mathematics, computing, and linguistics, precisely the triad that maps well onto modern NLP and applied ML work. That background provides plausible grounding for his later focus on linguistically informed annotation and robust dataset design.

Those disciplines (mathematics for formal reasoning, computer science for systems and tooling, linguistics for annotation schemes and semantics) are a practical foundation for building tools that produce high-quality language data. In other words, Chen’s education likely influenced both the technical approach and product language Surge uses with clients.

From Big Tech Engineer to Founder

Early career: systems, scaling, and product lessons

Before launching Surge, Chen worked as an engineer inside larger technology organizations. That kind of experience exposes future founders to large-scale production systems, reliability engineering, and the expectations of enterprise customers. Crucially, it also reveals friction points: in this case, that teams with advanced models still struggled to obtain reliable labeled data for fine-grained tasks.

Engineers who later become founders often use that domain knowledge to productize internal solutions that previously existed only as bespoke workflows. For Chen, the problem he productized was the reliability, provenance, and auditability of annotation workflows.

The core idea: fix the data problem

The core business hypothesis behind Surge was straightforward: many machine learning teams had access to compute and model architectures but lacked consistently accurate, auditable labels, especially for specialized, safety-critical, or high-stakes annotation tasks. Labels were inconsistent, annotation instructions were underspecified, and quality control was ad hoc. Chen saw an opportunity to build a vendor that optimized for annotation fidelity rather than low cost, one that could deliver datasets suitable for safety evals, RLHF training, and regulated contexts.

Surge launched (around 2020) to give customers a repeatable pathway from problem definition to a production-ready dataset.

What Surge AI Does: An NLP-centered explanation

In practical terms, Surge provides three technical building blocks that matter to NLP teams:

- Expert annotators + human-in-the-loop workflows. Surge sources people with domain expertise when necessary (e.g., clinicians for medical labeling, legal reviewers for policy work). Annotation can be task-specific: preference labels for RLHF, safety classification, nuanced content moderation, or linguistic phenomena tagging.

- Tooling and multi-stage QA pipelines. Annotation platforms are only as valuable as their quality assurance: cross-checks, adjudication layers, consensus algorithms, and disagreement analytics (e.g., inter-annotator agreement metrics such as Krippendorff’s alpha or Cohen’s kappa). Surge emphasizes repeatable QA that reduces annotation noise.

- Outcome-based delivery and dataset maintenance. Rather than billing solely on per-label labor, Surge often structures engagements around an outcome: a dataset that meets pre-agreed quality metrics and evaluation criteria. They also offer ongoing maintenance and re-annotation so datasets remain relevant as product requirements change.
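To make the agreement metrics mentioned above concrete, here is a minimal Python sketch of Cohen’s kappa for two annotators labeling the same items. The function name `cohens_kappa` and the toy "toxic"/"safe" labels are illustrative assumptions, not anything taken from Surge’s tooling; a production pipeline would more likely rely on an established statistics library and on Krippendorff’s alpha when there are more than two annotators or missing labels.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's marginal label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Degenerate case: both annotators used one identical label everywhere.
        # Returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical pilot batch: six items, two annotators.
annotator_1 = ["toxic", "safe", "safe", "toxic", "safe", "safe"]
annotator_2 = ["toxic", "safe", "toxic", "toxic", "safe", "safe"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.3f}")  # prints "kappa = 0.667"
```

In this toy batch the annotators agree on five of six items (raw agreement of about 0.83), but kappa is lower because half of that agreement would be expected by chance given how often each annotator uses each label.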

Revenue comes from building datasets, continuous dataset upkeep, and advisory/consulting on annotation design and model evaluation.

Why Surge Chose Quality Over Price: product + economic rationale

Many annotation providers compete primarily on labor cost and scale. Surge differentiated on precision, reproducibility, and trust. For complex annotation tasks (safety, RLHF, and domain-specific labeling), the cheaper solution often proves a false economy because low-quality labels can degrade model performance, introduce bias, or create safety failures. Surge’s model charges a premium for datasets that demonstrably reduce model errors on targeted evaluation suites.

From a business economics view, outcome-based pricing enables higher margins and makes it easier to serve customers with compliance or safety constraints. From an NLP perspective, it means investing more in clear label definitions, annotator training, and multi-pass adjudication: elements that increase label validity and reliability.

The $1B+ Milestone: scale without compromise?

In reporting from late 2024, Surge was described as generating over $1 billion in revenue for the full year. That figure drew attention because it suggested that a company focused on premium annotation could scale to very large revenues without following the same playbook as high-volume, low-cost labelers. Surge’s growth narrative also emphasized a degree of operational discipline: standardized processes, repeatable product offerings, and enterprise-level SLAs (service level agreements).

Whether that revenue came primarily from a few large enterprise contracts, recurring maintenance subscriptions, or broad client diversification is a detail for financial reporting; the headline is that the company demonstrated that premium labeling can be highly monetizable in a market where model builders value reliability and trust.

How Surge’s Pipeline Works: an operational sketch for NLP teams

A reproducible dataset pipeline matters for downstream model behavior. Surge’s typical workflow can be summarized as:

- Discovery & problem framing: Define the downstream metric (e.g., reduce toxic outputs by X%), task taxonomy, edge cases, and evaluation procedures.

- Instruction authoring & pilot labeling: Create precise annotation guidelines and run small pilots to measure initial agreement and surface ambiguities.

- Annotator recruitment & training: Source domain experts or trained annotators, run calibration sessions, and update documentation.

- Full annotation & multi-pass QA: Use adjudication and consensus strategies; include bias detection and provenance logging.

- Evaluation & delivery: Deliver datasets with baseline model evaluations showing how the dataset impacts model outputs; include audit trails and provenance metadata.

- Maintenance & iteration: Re-annotate or expand datasets as needs evolve; continuously monitor model drift and label stability.

This flow turns bespoke annotation projects into repeatable product offerings that enterprise customers can buy with confidence.
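The "full annotation & multi-pass QA" step above can be sketched in a few lines of Python. This is a hedged illustration of one common pattern (majority vote with escalation to a human adjudicator, plus retained votes for provenance), not a description of Surge’s actual system. The names `ItemResult` and `resolve`, the 2/3 agreement threshold, and the preference labels are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ItemResult:
    item_id: str
    label: Optional[str]        # None while the item awaits adjudication
    agreement: float            # share of votes behind the most common label
    needs_adjudication: bool
    provenance: list = field(default_factory=list)  # raw votes kept for audit


def resolve(item_id, votes, min_agreement=2 / 3):
    """Majority-vote consensus for one item.

    votes is a list of (annotator_id, label) pairs. Items whose top label
    falls below min_agreement are escalated instead of being auto-labeled.
    """
    if not votes:
        raise ValueError("each item needs at least one vote")
    counts = Counter(label for _, label in votes)
    top_label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    escalate = agreement < min_agreement
    return ItemResult(
        item_id=item_id,
        label=None if escalate else top_label,
        agreement=agreement,
        needs_adjudication=escalate,
        provenance=[{"annotator": a, "label": l} for a, l in votes],
    )


# Hypothetical RLHF-style preference votes from three annotators.
clear = resolve("q1", [("ann_a", "prefer_A"), ("ann_b", "prefer_A"), ("ann_c", "prefer_B")])
split = resolve("q2", [("ann_a", "prefer_A"), ("ann_b", "prefer_B"), ("ann_c", "tie")])
print(clear.label, clear.needs_adjudication)  # prints "prefer_A False"
print(split.label, split.needs_adjudication)  # prints "None True"
```

Keeping the threshold above 0.5 means a tied vote can never be auto-labeled, so every tie goes to adjudication. The stored votes are what would let a buyer later trace how each final label was reached.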

Comparison: Surge AI vs. Scale AI vs. Labelbox

| Feature / Dimension | Surge AI | Scale AI | Labelbox |
| --- | --- | --- | --- |
| Main focus | High-quality, expert labeling for RLHF & safety | Large-scale labeling & enterprise services | Annotation platform (self-serve + managed) |
| Typical clients | AI labs, safety teams, regulated enterprises | Broad enterprise ML teams requiring throughput | ML teams wanting flexible tooling & integrations |
| Pricing | Outcome-based, premium | Managed services + platform pricing | Subscription + usage |
| Best at | Complex, sensitive annotation tasks | Massive scale & throughput for labeled data | Flexible tooling and integration into dev workflows |
| Scalability | High for quality tasks (with longer lead times) | Very high for volume tasks | Scales with adoption and team usage |

Use this table as a quick procurement heuristic: choose Surge for high-stakes or safety-critical labeling, Scale when you need massive throughput, and Labelbox when you want a platform to self-manage annotation.

Leadership and Culture: how Edwin runs engineering and product

Edwin Chen has publicly emphasized a few leadership themes:

- Engineer-first product decisions in the early stages. He has expressed skepticism about hiring too many specialized PMs or non-engineering roles early on, preferring tight product feedback loops driven by engineers. That approach shaped early-stage hiring and tooling choices at Surge.

- Efficiency and leverage. Chen has commented on maximizing engineer leverage and the role of tooling in multiplying impact, a perspective that dovetails with Surge’s tooling investments to scale quality annotation without bloating headcount.

- Pragmatism over theory. The culture leans toward measurable outcomes and pragmatic QA metrics rather than abstract process for its own sake.

These cultural decisions influence how Surge structures teams (engineering + annotation operations), how it builds its annotation platform, and the speed at which it iterates on client workflows.

Ethical Questions & Criticisms: what to watch for

Any company that depends on human annotation faces recurring ethical questions. The most salient concerns include:

- Worker pay and conditions. Are annotators compensated fairly? How are they onboarded, supported, and protected from emotionally challenging content? Ask vendors for compensation policies, turnover rates, and mental health supports.

- Data provenance and consent. From where does the raw data originate? Were rights and permissions obtained? Provenance metadata and documentation are crucial when datasets include personal data or copyrighted content.

- Downstream harms and label bias. Incorrect or biased labels create model biases and safety failures. Vendors should provide audit reports and bias-detection analyses.

- Transparency and traceability. Buyers should demand provenance logs, inter-annotator agreement metrics, and clear records of instruction changes or adjudication outcomes.

These are industry-wide questions, not unique to any single vendor, and the strongest vendors respond with detailed audits, worker policies, and reproducible provenance metadata.
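On the buyer side, the transparency checks listed above can be partly automated. The sketch below assumes a delivered dataset arrives as a list of dictionaries and checks that each record carries a minimal set of provenance fields. The field names in `REQUIRED_FIELDS` and the function `audit_provenance` are hypothetical; a real engagement would use whatever schema the buyer and vendor agreed on.

```python
# Hypothetical minimum provenance schema for each delivered label.
REQUIRED_FIELDS = ("item_id", "label", "annotator_id", "guideline_version", "labeled_at")


def audit_provenance(records, required=REQUIRED_FIELDS):
    """Return {record index: [missing field names]} for records that fail."""
    problems = {}
    for i, record in enumerate(records):
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [f for f in required if record.get(f) in (None, "")]
        if missing:
            problems[i] = missing
    return problems


# Toy delivery: the second record lacks the guideline version it was labeled under.
delivered = [
    {"item_id": "q1", "label": "safe", "annotator_id": "ann_a",
     "guideline_version": "v3", "labeled_at": "2024-05-01T10:00:00Z"},
    {"item_id": "q2", "label": "toxic", "annotator_id": "ann_b",
     "guideline_version": "", "labeled_at": "2024-05-01T10:05:00Z"},
]
problems = audit_provenance(delivered)
print(problems)  # prints "{1: ['guideline_version']}"
```

A check like this only confirms that provenance fields are present; whether their contents are accurate still requires the audits and vendor documentation discussed above.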

Net Worth & Financial Picture: cautious accounting

- Reported company revenue (2024): Over $1B. (Industry reporting.)

- Valuation & founder ownership: Private details are not fully public. Estimating Edwin Chen’s personal net worth requires assumptions about ownership percentage and company valuation; public estimates should be treated as speculative.

In short, Surge’s scale likely created significant founder wealth, but exact personal net worth figures are private and should be interpreted cautiously.

Timeline: key dates and events

| Year | Event |
| --- | --- |
| ~1988 | Edwin Chen estimated birth year (approximate). |
| 2006–2010 | MIT studies in mathematics, CS, linguistics (public profiles). |
| 2010s | Engineering roles in larger tech firms (public profiles). |
| 2020 | Founded Surge AI (approximate founding year). |
| 2023–2024 | Rapid growth and wide enterprise adoption. |
| 2024 | The company reportedly generated > $1B in revenue (reported). |
| 2026 | Named to TIME100 AI list (recognition). |

Pros & Cons

Pros

- High-quality outputs that reduce downstream model failure rates on targeted evaluation suites.

- Outcome-based pricing aligns vendor incentives with client outcomes.

- Strong client trust for safety-critical projects and regulatory-compliant workflows.

Cons

- Higher cost compared to commodity labeling: not suitable for low-stakes, high-volume tasks.

- Skilled annotator scarcity: recruiting and retaining domain experts is a constraint.

- Ethics and transparency scrutiny: buyers will demand provenance and worker pay disclosures.

FAQs

Q: Who is Edwin Chen?
A: Edwin Chen is the founder and CEO of Surge AI, a company that provides high-quality labeled data and human-in-the-loop tooling for AI teams and safety work.

Q: How much revenue did Surge AI generate in 2024?
A: Industry reporting indicates Surge generated over $1 billion in revenue in 2024, positioning it among the largest vendors in the data-labeling sector.

Q: Is Surge AI bootstrapped or venture-funded?
A: Some reports on Surge’s early growth emphasized bootstrapping and profitable operations; there have also been reports of later-stage fundraising interest. Specific fundraising history should be confirmed via company disclosures and reporting.

Q: What makes Surge AI different from other labeling vendors?
A: Surge emphasizes expert annotation, reproducible QA pipelines, and outcome-based delivery rather than low-cost volume labeling.

Q: Are there ethical concerns around data labeling?
A: Yes. Key concerns include worker pay, data provenance, consent, and downstream bias. Best practice is to ask vendors for audits, pay policies, and provenance metadata.

Conclusion

Edwin Chen and Surge AI demonstrate that the often-underappreciated work of labeling and dataset curation is a large, valuable, and high-leverage component of the modern AI stack. By prioritizing annotation fidelity, reproducible QA, and outcome-driven offerings, Surge converted a practical engineering capability into a high-margin enterprise business. That success also surfaces industry-wide questions about worker treatment, provenance, and bias, issues any responsible buyer must audit before deployment.